To understand how embeddings work in NLP, we need to explore how machines represent human language. Embeddings are at the core of modern natural language processing (NLP), enabling AI systems to process and understand text with meaning.

What Are Embeddings in NLP?

Embeddings are numerical vector representations of words, phrases, or documents. These vectors capture semantic meaning, allowing similar words to have similar representations.

For example:

- “king” and “queen” would have vectors that are close in space

- “dog” and “cat” would also be near each other

How Embeddings Work in NLP

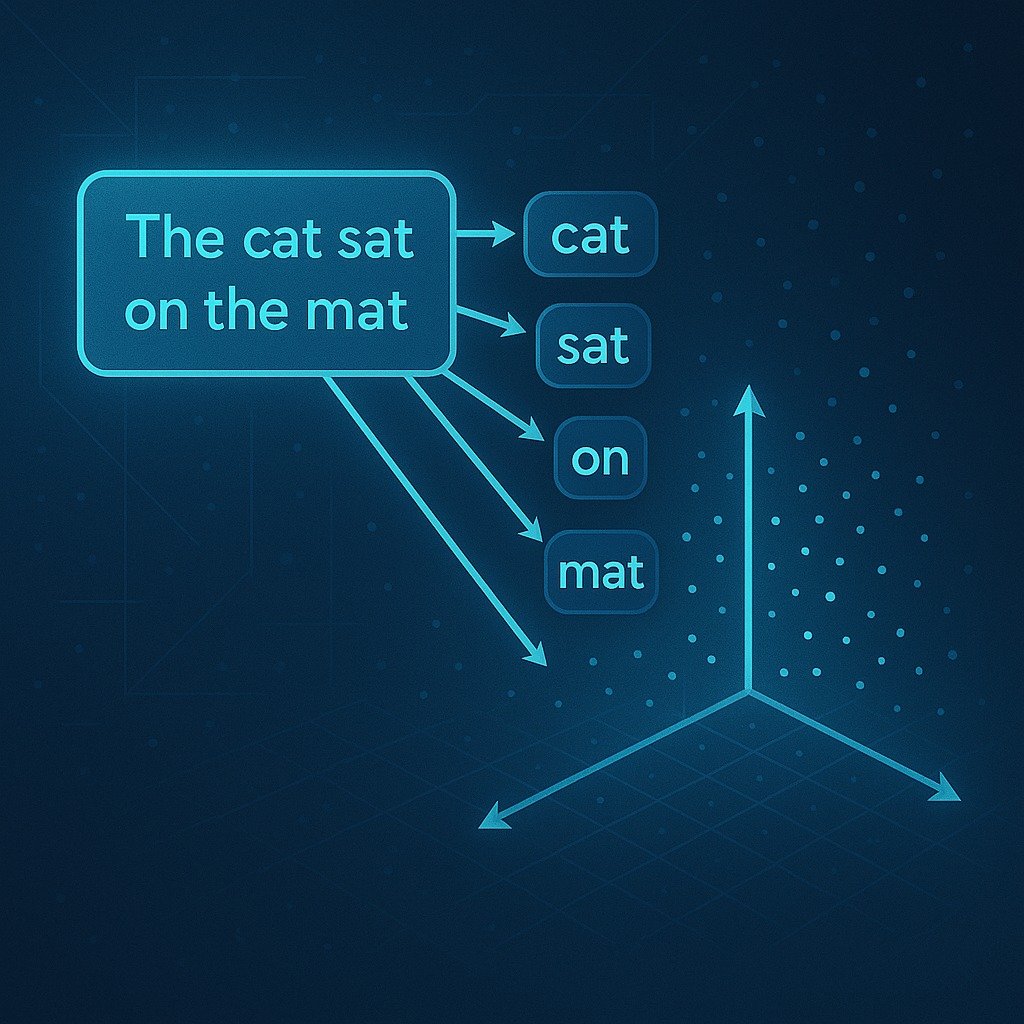

1. Tokenization and Input Representation

Before embeddings, text is tokenized into units (words or subwords). Each token is then mapped to a vector.

2. Vector Space Representation

The vector lives in a high-dimensional space. Relationships in this space reflect linguistic similarities and patterns. Closer vectors imply semantic closeness.

3. Training Embeddings

Embeddings can be:

- Pretrained on large corpora (e.g., Word2Vec, GloVe, FastText)

- Learned as part of model training (e.g., BERT, GPT embeddings)

Pretrained embeddings are often used to initialize models, saving time and improving performance.

Types of Embeddings in NLP

1. Word Embeddings

Each word gets a fixed vector. Examples:

- Word2Vec

- GloVe

2. Contextual Embeddings

These change depending on context. Examples:

- BERT

- ELMo

3. Sentence and Document Embeddings

Vectors representing entire sentences or documents. Useful for tasks like:

- Semantic search

- Text similarity

Applications of Embeddings

- Text classification

- Machine translation

- Question answering

- Chatbots and conversational AI

Embeddings enable models to generalize and perform better across tasks.

Internal and External Resources

Explore vector storage in our guide: [Vector Database for LLM]

Further reading:

Conclusion

Understanding how embeddings work in NLP helps you grasp the foundation of language models. By converting language into numbers, embeddings allow machines to reason, search, and respond intelligently. They power everything from search engines to AI assistants.

CTA: Want to build smarter NLP systems? Learn more about embeddings and try out pretrained models today!

FAQ: How Embeddings Work in NLP

What is an embedding in NLP?

An embedding is a vector representation of text that captures its meaning for use in machine learning.

How are embeddings created?

They are trained on large corpora using models like Word2Vec, GloVe, or transformers like BERT.

Why are embeddings important?

They enable NLP systems to understand and manipulate text in a way that captures semantic relationships.

Leave a Reply