Understanding how LLM fine tuning works is essential for customizing large language models for domain-specific tasks. Fine tuning refines a pretrained model on targeted data, which improves its performance and relevance in real-world applications.

What Is LLM Fine Tuning?

LLM fine tuning is the process of adapting a general-purpose pretrained language model to a specific task or domain. It involves additional training on a dataset that is much smaller than the pretraining corpus and is usually labeled for the target task.

Why Fine Tune an LLM?

- Domain adaptation: Make models perform better in legal, medical, or technical fields

- Task specificity: Improve accuracy for classification, translation, summarization, etc.

- Performance enhancement: Boost output quality beyond generic capabilities

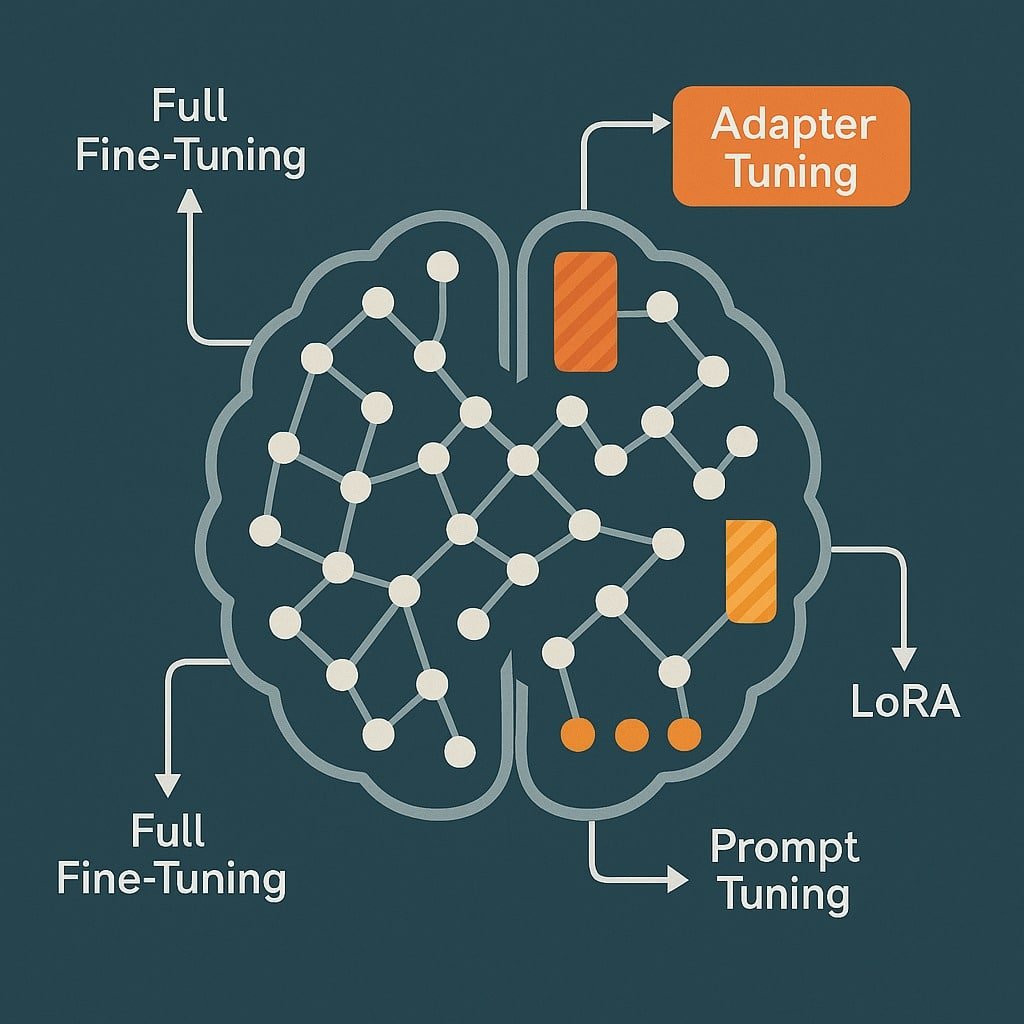

Key LLM Fine Tuning Methods

1. Full Fine Tuning

- All of the model's weights are updated on the new data

- High computational and memory cost, because every parameter needs gradients and optimizer state

- Best for extensive customization
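To put the cost of full fine tuning in rough numbers, here is a back-of-envelope sketch. It assumes mixed-precision training with the Adam optimizer, where a commonly cited rule of thumb is about 16 bytes per parameter for weights, gradients, and optimizer state. The 7-billion-parameter model size is a hypothetical example, and activation memory is deliberately left out, so treat the result as a lower bound rather than a measurement.

```python
def full_finetune_memory_gb(num_params: int, bytes_per_param: int = 16) -> float:
    """Rough memory for weights, gradients, and Adam state (activations excluded)."""
    return num_params * bytes_per_param / 1e9


# Assumed per-parameter breakdown under mixed-precision Adam:
#   2 bytes  fp16 weights
#   2 bytes  fp16 gradients
#   4 bytes  fp32 master copy of the weights
#   4 bytes  Adam first moment
#   4 bytes  Adam second moment
# Total: 16 bytes per parameter.
estimate = full_finetune_memory_gb(7_000_000_000)  # hypothetical 7B-parameter model
print(f"{estimate:.0f} GB")  # prints "112 GB"
```

Under these assumptions, even a mid-sized model does not fit on a single commodity GPU, which is the main motivation for the parameter-efficient methods.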

2. Parameter-Efficient Fine Tuning (PEFT)

PEFT covers methods that keep most of the pretrained weights frozen and train only a small number of parameters:

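- LoRA (Low-Rank Adaptation): Injects pairs of small trainable low-rank matrices alongside the frozen weight matrices

- Adapters: Inserts small trainable layers between the frozen layers of the model

- Prefix Tuning: Prepends trainable continuous vectors (virtual tokens) to the input, while the model's own weights stay frozen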

These methods require less compute and storage.
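As an illustration of why LoRA trains so few parameters, here is a minimal pure-Python sketch of a single LoRA-adapted linear layer. The dimensions, rank, scaling value, and helper names (`matmul`, `lora_forward`) are all assumptions chosen for readability; real implementations operate on tensors inside a deep learning framework.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_forward(x, W, A, B, alpha, r):
    """Compute x @ W + (alpha / r) * (x @ A @ B), where W stays frozen."""
    base = matmul(x, W)
    update = matmul(matmul(x, A), B)
    scale = alpha / r
    return [[b + scale * u for b, u in zip(b_row, u_row)]
            for b_row, u_row in zip(base, update)]


# Hypothetical toy sizes; real layers are thousands of units wide.
d_in, d_out, r, alpha = 8, 8, 2, 4
rng = random.Random(0)
W = [[rng.gauss(0, 1) for _ in range(d_out)] for _ in range(d_in)]  # frozen pretrained weight
A = [[rng.gauss(0, 0.01) for _ in range(r)] for _ in range(d_in)]   # trainable, d_in x r
B = [[0.0] * d_out for _ in range(r)]                               # trainable, r x d_out, starts at zero

x = [[1.0] * d_in]
# Because B starts at zero, the adapted layer initially matches the frozen layer.
assert lora_forward(x, W, A, B, alpha, r) == matmul(x, W)

full_params = d_in * d_out        # parameters updated by full fine tuning
lora_params = r * (d_in + d_out)  # parameters updated by LoRA
print(full_params, lora_params)   # prints "64 32"
```

The saving grows with layer width: the full matrix scales with `d_in * d_out`, while the LoRA matrices scale with `r * (d_in + d_out)`, so a small rank on a large layer leaves only a tiny fraction of the parameters trainable.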

3. Instruction Tuning

The model is fine-tuned on a diverse set of instructions paired with desired responses, which improves its zero-shot and few-shot performance on tasks it was not explicitly trained for.
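A sketch of how one instruction/response pair might be turned into a training example is shown below. The prompt template, the whitespace "tokenizer", and the `build_example` helper are all hypothetical stand-ins, since real pipelines use the model's own tokenizer and chat format. Masking the prompt tokens so that only the response contributes to the loss is a common choice, not a requirement. The value -100 is the default `ignore_index` of PyTorch's cross-entropy loss.

```python
IGNORE_INDEX = -100  # label value that the loss function is told to skip


def build_example(instruction: str, response: str, vocab: dict) -> dict:
    """Turn one instruction/response pair into token ids and loss labels."""
    # Hypothetical prompt template; real projects define their own format.
    prompt = f"### Instruction:\n{instruction}\n\n### Response:\n"
    # Toy whitespace tokenizer: each new token gets the next free integer id.
    prompt_ids = [vocab.setdefault(tok, len(vocab)) for tok in prompt.split()]
    response_ids = [vocab.setdefault(tok, len(vocab)) for tok in response.split()]
    return {
        "input_ids": prompt_ids + response_ids,
        # Prompt positions are masked, so only response tokens are scored.
        "labels": [IGNORE_INDEX] * len(prompt_ids) + response_ids,
    }


vocab = {}
example = build_example("Summarize the report.", "Revenue grew.", vocab)
print(example["input_ids"])
print(example["labels"])
```

Repeating this over many different instructions, rather than many examples of one task, is what distinguishes instruction tuning from single-task fine tuning.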

Steps in LLM Fine Tuning

- Data collection: Task-specific, labeled data

- Preprocessing: Tokenization, formatting, and quality checks

- Training: Run the chosen fine tuning method on the prepared data

- Evaluation: Measure performance metrics on a held-out validation set

- Deployment: Serve the tuned model in a production environment
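The data-handling and evaluation steps above can be sketched in plain Python. This is a minimal illustration, not a full pipeline: the support-ticket records are made-up sample data, the function names are hypothetical, and `placeholder_predict` is a keyword rule standing in for a fine-tuned model so that the example runs without any training.

```python
import random


def preprocess(records):
    """Normalise whitespace, then drop empty and duplicate texts."""
    seen, clean = set(), []
    for text, label in records:
        text = " ".join(text.split())
        if text and text not in seen:
            seen.add(text)
            clean.append((text, label))
    return clean


def train_validation_split(records, validation_fraction=0.2, seed=0):
    """Shuffle deterministically, then hold out a validation slice."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[:-n_val], shuffled[-n_val:]


def accuracy(predict, validation):
    """Fraction of validation examples labeled correctly."""
    correct = sum(predict(text) == label for text, label in validation)
    return correct / len(validation)


def placeholder_predict(text):
    """Keyword rule standing in for a fine-tuned classifier."""
    billing_words = ("refund", "charged", "invoice")
    return "billing" if any(w in text.lower() for w in billing_words) else "technical"


# Made-up sample data for illustration only.
raw = [
    ("Refund  not received", "billing"),
    ("Refund not received", "billing"),    # duplicate after whitespace normalisation
    ("", "billing"),                       # empty text, dropped
    ("App crashes on login", "technical"),
    ("Charged twice this month", "billing"),
    ("Cannot reset password", "technical"),
    ("Invoice shows wrong amount", "billing"),
]
data = preprocess(raw)
train, validation = train_validation_split(data)
print(len(train), len(validation), accuracy(placeholder_predict, validation))
```

Keeping the validation slice separate from the training data is the important design choice here: metrics computed on examples the model has already seen during training overstate how well it will perform in production.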

Real-World Use Cases

- Customer support: Chatbots tuned to specific industries

- Healthcare: Language models for clinical summarization

- Finance: Tailored models for report analysis and predictions

Internal and External Resources

Learn how LLMs are trained: [How LLMs Are Trained]


Conclusion

LLM fine tuning is a powerful way to tailor AI models to specific needs. Whether you're building a healthcare assistant or a legal advisor bot, fine tuning helps your LLM respond with greater precision and relevance in that domain.

Interested in customizing LLMs for your business? Start exploring fine tuning frameworks today!

FAQ: LLM Fine Tuning Method

What is fine tuning in LLMs?

Fine tuning adapts a pretrained LLM to perform better on a specific task using additional labeled data.

What are parameter-efficient fine tuning methods?

They are techniques like LoRA and Adapters that minimize resource use while achieving customization.

Is fine tuning always necessary?

No. For general tasks, a pretrained model may be sufficient. Fine tuning is most useful when the model needs to perform well on a specialized domain or task that generic training data covers poorly.
